When no robots.txt file exists in the root directory of a WordPress website, WordPress dynamically generates a virtual robots.txt file containing basic directives. As long as no physical robots.txt file exists in the root directory, you can view this virtual file by visiting the following URL:

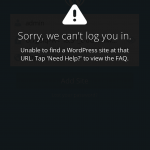

http(s)://your-domain-name.com/robots.txt

By default, WordPress includes the following directives in its virtual robots.txt (on WordPress 5.5 and later, a `Sitemap:` line pointing to the built-in XML sitemap may follow them):

```
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
```
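To make the default concrete, here is a minimal PHP sketch of how such a virtual file can be assembled. It is modeled loosely on WordPress core's `do_robots()` function, which builds this text and then passes it through the `robots_txt` filter. However, `build_default_robots_txt()` is a hypothetical name, not a WordPress API, and the site URL is passed in as a parameter as an assumption standing in for core's own `site_url()` call. The exact core code also differs between versions.

```php
<?php
// Hypothetical sketch of how the default virtual robots.txt is assembled.
// Not a WordPress function: $siteUrl stands in for core's site_url().
function build_default_robots_txt(string $siteUrl): string
{
    // If WordPress is installed in a subdirectory (e.g. /blog), the rules
    // are prefixed with that path; a root install yields an empty prefix.
    $path = parse_url($siteUrl, PHP_URL_PATH) ?? '';
    $path = rtrim((string) $path, '/');

    $output  = "User-agent: *\n";
    $output .= "Disallow: {$path}/wp-admin/\n";
    $output .= "Allow: {$path}/wp-admin/admin-ajax.php\n";

    // In WordPress, this string would now be handed to the 'robots_txt'
    // filter together with the site's 'blog_public' setting.
    return $output;
}
```

For example, `build_default_robots_txt('https://your-domain-name.com')` returns exactly the three lines shown above, while a URL ending in `/blog` yields `Disallow: /blog/wp-admin/`.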

A robots.txt file tells web robots (crawlers) which parts of a site they may crawl, using a convention known as the Robots Exclusion Protocol. The aim is for crawlers to visit only the site's public pages and to skip pages you do not want crawled, such as private areas or duplicate content. The protocol is advisory: well-behaved crawlers follow it, but it does not enforce access control, so it cannot by itself hide private pages from the world.
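When rules overlap, as `Disallow: /wp-admin/` and `Allow: /wp-admin/admin-ajax.php` do in the default file, crawlers that follow RFC 9309 (the IETF specification of the Robots Exclusion Protocol) apply the most specific matching rule, meaning the one with the longest path, and an `Allow` rule wins a tie. The PHP sketch below illustrates only that precedence. `is_path_allowed()` is a hypothetical helper, and it deliberately ignores `*` and `$` wildcards, percent-encoding, and `User-agent` group selection, so it is not a complete robots.txt parser.

```php
<?php
// Hypothetical sketch of longest-match rule precedence (RFC 9309) for a
// single group of rules. Simplifications: plain path prefixes only, no
// "*" or "$" wildcards, no percent-encoding, no User-agent selection.
function is_path_allowed(string $path, array $rules): bool
{
    $bestLength = -1;
    $allowed = true; // If no rule matches, the path may be crawled.

    foreach ($rules as [$type, $prefix]) {
        // An empty rule value matches nothing; skip non-matching prefixes.
        if ($prefix === '' || strncmp($path, $prefix, strlen($prefix)) !== 0) {
            continue;
        }
        $length = strlen($prefix);
        // The longest matching rule wins; on a tie, "allow" wins.
        if ($length > $bestLength || ($length === $bestLength && $type === 'allow')) {
            $bestLength = $length;
            $allowed = ($type === 'allow');
        }
    }
    return $allowed;
}

// The default WordPress rules as [type, path prefix] pairs.
$wpDefaultRules = [
    ['disallow', '/wp-admin/'],
    ['allow', '/wp-admin/admin-ajax.php'],
];
```

With these rules, `/wp-admin/admin-ajax.php` is allowed because its `Allow` rule is longer than `Disallow: /wp-admin/`, `/wp-admin/options.php` is disallowed, and ordinary front-end URLs match no rule and stay crawlable.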

When a physical robots.txt file exists in the website's root directory, the web server serves that file and WordPress's virtual robots.txt is no longer used. If you would rather not create and edit a robots.txt file on the web server, WordPress core provides a filter hook, `robots_txt`, in the robots.txt generation process, which lets you modify the virtual robots.txt that WordPress produces without adding a physical file.
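A filter hook passes a value through every callback registered for it and uses whatever the last callback returns. The following PHP sketch models that behaviour for `robots_txt`. The `FilterChain` class is hypothetical and much simpler than WordPress's real hook implementation (for instance, it ignores the accepted-arguments count given to `add_filter()`), but it shows why a callback must return the text and why callbacks with a larger priority number run later and can override earlier changes.

```php
<?php
// Hypothetical, stripped-down model of a WordPress filter such as
// 'robots_txt'. Not WordPress's hook class: callbacks are grouped by
// priority, lower numbers run first, and each callback receives the
// previous callback's return value plus the same extra arguments.
final class FilterChain
{
    /** @var array<int, callable[]> callbacks grouped by priority */
    private array $callbacks = [];

    public function add(callable $callback, int $priority = 10): void
    {
        $this->callbacks[$priority][] = $callback;
    }

    public function apply(string $value, ...$args): string
    {
        ksort($this->callbacks); // Lower priority numbers run earlier.
        foreach ($this->callbacks as $group) {
            foreach ($group as $callback) {
                // Same-priority callbacks run in the order they were added.
                $value = $callback($value, ...$args);
            }
        }
        return $value;
    }
}
```

In this model, a callback that appends to the incoming value, like `AddtoRobotsTxt`, keeps earlier output, whereas one that returns a fresh string, like `ReplaceWPRobotsTxt`, discards everything produced before it.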

This tutorial shows how to use the `robots_txt` filter hook in the WordPress API to modify the virtual robots.txt dynamically. Plenty of plugins can manage robots.txt in WordPress, but for this single task they may be overkill, bundling features you do not need.

To append rules to the virtual robots.txt generated by WordPress, add the following PHP code to the active theme’s functions.php file:

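```php
function AddtoRobotsTxt($robotstext, $public) {
    // $robotstext is the robots.txt content generated so far;
    // $public is the site's 'blog_public' setting (unused here).
    // The leading newline leaves a blank line before the new group.
    $robotsrules = "\nUser-agent: Googlebot\nAllow: /\n";

    return $robotstext . $robotsrules;
}

// Priority 10 (the default); accept both arguments passed by the filter.
add_filter('robots_txt', 'AddtoRobotsTxt', 10, 2);
```

To completely replace the content of robots.txt with your own rules and instructions, add the following PHP code to the active theme’s functions.php file: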

```php
function ReplaceWPRobotsTxt($robotstext, $public) {
    // Discard everything generated so far, including output added by
    // callbacks that ran earlier, and return custom rules instead.
    $robotstext = "User-agent: Googlebot\nAllow: /\n";

    return $robotstext;
}

add_filter('robots_txt', 'ReplaceWPRobotsTxt', 10, 2);
```

After saving the change, visit your website URL with /robots.txt appended (e.g. https://your-domain-name.com/robots.txt) to view the generated robots.txt.
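Both callbacks above ignore the `$public` argument. WordPress passes the site's `blog_public` option there, which is `'0'` when "Discourage search engines from indexing this site" is checked under Settings > Reading. The following is a minimal sketch of a variant that appends rules only when the site is public. `AddtoRobotsTxtIfPublic` is a hypothetical name, and the `function_exists()` guard is an addition so the function can also be loaded outside WordPress.

```php
<?php
// Hypothetical variant: append a rule only when the site is visible to
// search engines. $public carries the 'blog_public' option ('0' or '1').
function AddtoRobotsTxtIfPublic($robotstext, $public)
{
    if ('0' === (string) $public) {
        return $robotstext; // Leave WordPress's output untouched.
    }
    return $robotstext . "\nUser-agent: Googlebot\nAllow: /\n";
}

// Register only when running inside WordPress.
if (function_exists('add_filter')) {
    add_filter('robots_txt', 'AddtoRobotsTxtIfPublic', 10, 2);
}
```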